2014 Backblaze Datacenter Day

(11/4/2014)

On Tuesday, 11/4/2014, Brian Wilson went to visit the Backblaze Sungard datacenter located at 11085 Sun Center Dr, Rancho Cordova, CA 95670. A large crew was there for a push to build and rack the very first Backblaze Vault that will go into production (with customer data on it). Below are some pictures from the day.

The first picture below shows that Brian Wilson dresses too colorfully to work in a datacenter full time. Our datacenter team seems to dress in black t-shirts and jeans, so Brian is like a fish out of water here. From left to right: Bryan Williams, Matt Wright, Ric Marques, Brian Wilson (me) in the bright blue shirt, Dave Stallard, Sean Harris, Aaron McCormack, Ken Manjang, and on the far right, Larry Wilke. We are standing in front of the very first Backblaze "Vault" that will accept customer data in a few days.

Below, Dave Stallard (jet-lagged from Greece) drops off a pod in the "Backblaze Storage Room" at the Sungard datacenter. Notice the stack of drives Dave is leaning back against? That is a stack of 2,500 drives, each one a 3 TByte Seagate headed for the dumpster (e-waste recycling); they are junk. And each one was pulled out of a chassis by hand by a datacenter tech, ONE BY ONE. The techs put them back in styrofoam simply as a convenient way to handle them; each "pack" holds 20 drives, a reasonable weight to carry.

A close-up of a stack of dead chassis and a stack of dead drives.

The "Backblaze storage room" above is outside the cooled area of the datacenter. Below is a picture of the space inside the cooled area where Backblaze has a few desks. I call this the "Caged Office".

A picture from the end of the day in the Caged Office, after the final vault pod was racked. From left to right: Larry Wilke's arm, Aaron McCormack in back left, Ric Marques in the center, Sean Harris with folded arms, Matt Wright facing away, and Ken Manjang in the red shirt. Missing from this picture is Bryan Williams, who was also there.

This is inside the main area being built out with racks; think of it as a staging area. The red pods furthest away in this picture are brand new and will be deployed into racks the next day.

Everything is done at scale: here are a hundred rails to be installed in the cabinets.

The magnitude of this storage experiment called Backblaze is awe-inspiring. Every single place you look, ANYWHERE, is stuffed with drives. New drives to go into pods, old failed drives to be disposed of, spare replacement drives of every size: 1 TByte, 1.5 TByte, 3 TByte, 4 TByte, 6 TByte. Drives everywhere.

I noticed this sign in the corner that says "Please stack the hard drives here." So somebody put $85,000 worth of brand new shrink-wrapped drives there.

Here is a little baby stack of hard drives in the corner. EVERY CORNER has hard drives.

Some drives under the work benches.

With all the cabinets full, spare pod parts (for repairs) are stored in tupperware containers stacked to the ceiling.

Parts and stuff of a huge operation.

This is the crowning achievement of this particular day: the very first "production" vault, the first vault that will store real customer data. It is 20 pods, each pod has 10 Gbit/sec networking, and every pod is in its own cabinet. Customer data is "striped" across all 20 pods, so we can unplug and lose 3 entire pods without losing a SINGLE bit of customer data, and that data stays fully accessible.
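The post does not say which coding scheme does the striping. A common way to get this property is an erasure code: k data shards plus 20 - k parity shards, where any k surviving shards rebuild everything, so tolerating 3 lost pods means k can be at most 17. The toy sketch below assumes a 17 + 3 split and uses Lagrange interpolation over the prime field GF(257), a Reed-Solomon-style construction picked for brevity. It is not Backblaze's implementation, and the names `encode`, `decode`, and `_interpolate` are hypothetical.

```python
# Toy systematic erasure code over the prime field GF(257).
# ASSUMPTION: 17 data shards + 3 parity shards = 20 pods (split not stated in the post).

P = 257  # prime modulus; every byte value 0..255 is a valid field element


def _interpolate(xs, ys, x):
    """Evaluate at x the unique polynomial of degree < len(xs) through (xs[i], ys[i]), mod P."""
    total = 0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        num, den = 1, 1
        for j, xj in enumerate(xs):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # Division in GF(P) is multiplication by the modular inverse (Fermat's little theorem).
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data, n):
    """Spread k data symbols over n shards keyed 1..n; shards 1..k hold the data itself."""
    k = len(data)
    xs = list(range(1, k + 1))
    return {x: _interpolate(xs, data, x) for x in range(1, n + 1)}


def decode(shards, k):
    """Recover the k data symbols from any k surviving shards {pod_index: value}."""
    if len(shards) < k:
        raise ValueError("too many pods lost: fewer than k shards survive")
    xs = sorted(shards)[:k]
    ys = [shards[x] for x in xs]
    return [_interpolate(xs, ys, x) for x in range(1, k + 1)]


# Demo: one byte per data pod, then unplug any three pods.
data = list(b"customer-backup!!")  # 17 bytes -> 17 data shards
shards = encode(data, n=20)
for lost_pod in (2, 9, 20):
    del shards[lost_pod]
recovered = bytes(decode(shards, k=17))
```

Here each pod holds one symbol; a real system would apply the same math to every byte position of a large block, and would more likely use GF(2^8) arithmetic so parity values also fit in a byte (in this toy field a parity value can reach 256). Losing a fourth pod leaves only 16 shards, and `decode` raises an error.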

A slightly different angle of one row of the first production vault.

A close-up of vault member "06" of Vault 1000. All vaults are numbered starting at 1000. All "old style stand-alone pods" are numbered 000-999.

Ken Manjang racking the final member pod of Vault 1000 (the first vault). Ken was in charge of today's vault project: he coordinated which pods got rebuilt and where the final rebuilt pods landed in the racks. Every pod in production (not just vault pods) is recorded in a map so it can be found among the racks and racks of pods.

Here are the two server lifts that help safely deploy (rack or unrack) the 150-pound pods.

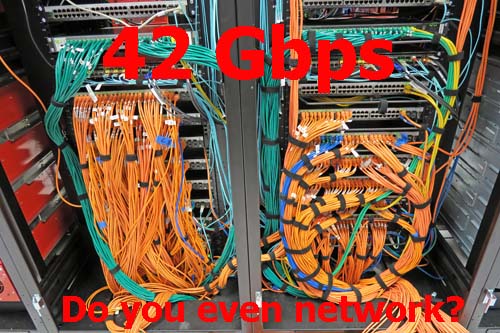

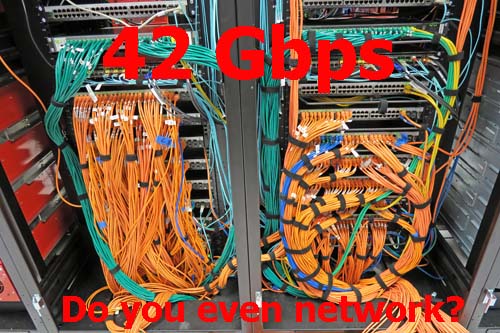

The current incoming flow of customer backups at Backblaze as of today (11/4/2014) is about 42 Gbits per second flowing into Backblaze pods, and about 1 Gbit/sec flows OUT (customer restores being downloaded, plus other traffic like people browsing our blog and web pages). Almost all of that traffic flows through the INSANE bundle of cables seen below. Some of those lines are fiber optic at up to 40 Gbit/sec, some are 10 Gbit/sec copper, and some are 1 Gbit/sec copper. We have about 1,000 computers deployed (890 pods plus web servers), so there are about 1,000 Ethernet patch cords in the picture below. They all sink down through the floor and then run across the datacenter in different directions beneath the 20-inch raised floor.
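To put those network rates in storage terms, here is a back-of-the-envelope sketch. It assumes the 42 Gbit/sec inbound and 1 Gbit/sec outbound rates hold steady for a full day and uses decimal units (1 TByte = 8,000 Gbit); real traffic varies over the day, so treat the results as rough estimates. The function name is illustrative only.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds
GBIT_PER_TBYTE = 8 * 1000       # decimal units: 8 bits per byte, 1,000 GBytes per TByte


def gbit_per_sec_to_tbyte_per_day(rate_gbit_per_sec):
    """Convert a sustained network rate (Gbit/sec) to TBytes moved per day."""
    return rate_gbit_per_sec * SECONDS_PER_DAY / GBIT_PER_TBYTE


incoming = gbit_per_sec_to_tbyte_per_day(42)  # customer backups flowing in
outgoing = gbit_per_sec_to_tbyte_per_day(1)   # restores and web traffic flowing out
print(f"in:  {incoming:.1f} TBytes/day")
print(f"out: {outgoing:.1f} TBytes/day")
```

Under those assumptions this works out to roughly 450 TBytes of customer data arriving per day, against about 11 TBytes per day going out.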

A slightly tighter zoom on the same bundle. The full-resolution original shows the labels on all the cables.

A picture of a "cold aisle". The cold air runs under the floor, and the floor tiles have holes that let the cool pressurized air flow upwards. All the fans on all the equipment "pull" from this aisle and push the heated air out the other side (the "warm aisle").

This is a picture of the "warm aisle"; notice there are no holes in the floor tiles. Also notice the enormous complexity of the power wiring and the sheer number of pods the datacenter team is dealing with at all times. Even FINDING a certain pod in these stacks is difficult.

The picture below shows a rack of "restore servers". These servers prepare customer restores as ZIP files containing any number of files, from 1 file to 7 million files.

Below is a picture of the very first "cluster authority" we ever had at Backblaze, "ca000". This cluster authority handles about 500,000 hguids (individual computers), of which about 120,000 have phoned home to this server within the last 24 days, and the base design is about 6 years old. The basic design is a Dell server with about 32 TBytes of "PowerVault MD1220" shelves attached to it. It holds the customer billing information and customer login information.

Below is a picture of the second cluster authority Backblaze ever built, called "ca001". It is an EMC VNX Series with 2 TBytes of fast SSD, currently 23 TBytes for metadata (expandable to four times that), and 100 TBytes of archive (backups of the metadata). We built it to handle roughly three times as many hguids (individual computer backups) as the first cluster authority, ca000, seen above. We can deploy 997 more clusters without solving very many scaling problems.

I'm pointing at the pod named "ul019", which means it is a very old pod: the 19th pod we put into production, probably in 2008 or early 2009.

When we exceed the power that is available to any one cabinet, we cannot put any more pods in that cabinet. So the datacenter team uses the dead space at the top of every rack to store spare hard drives in tupperware. See below.

Here are some Hitachi 1 TByte drives in tupperware.

Here are some Western Digital 1 TByte drives.

These are brand new pods that will be deployed tomorrow.

Bryan Williams and Larry Wilke using the server lift to move pods to the work bench where they are being rebuilt.

Larry using the server lift to move pods around.

Bryan labels every drive before doing a "chassis swap". This is where we pull every hard drive out and insert it into the exact same location in a new chassis. It solves all sorts of issues, such as a bad motherboard, power supply, or SATA cable. In this case we are swapping from "direct wire Protocase pods" to a new pod built by Evolve with 10 Gbit networking on the motherboard.

The picture below shows a server lift in use. The lift is positioned under the pod and adjusted to just below the bottom of the pod. Then the datacenter tech (I think this was Dave Stallard) walks around to the other side and pushes the pod onto the server lift.

The picture below was taken by Sean as Ric (black shirt) was explaining the upcoming network cabling issue to me (bright blue shirt). That's Dave with the backpack.

Another picture by Sean, as I'm handling a heat sink that very recently solved an issue we were having (the previous heat sink was too tall and didn't fit).

Below is another picture Sean took of me (Brian in the bright blue shirt) doing a "chassis swap": all drives move from the chassis in front of Ken to the chassis in front of me. The datacenter team estimates they have done this procedure about 500 times in the last 7 years to solve various problems.

Ken and I are laughing, probably because I'm moving too slow.

Final picture of me closing up my finished pod.

The series of pictures below shows some of the cooling and power units (called "PDUs" by the datacenter) that provide enormous amounts of electrical power and cooling to the building. The entire Sungard facility is a 5 Megawatt facility, so up to 5 Megawatts of power can be drawn off the grid into this building. (Not all of that goes to Backblaze computers yet.)

This is PDU-39, which appears to be a Liebert PPC Power Distribution Cabinet.

This is a close-up of PDU-21 and the digital display on the outside.

A row of the PDUs just outside the Backblaze caged area at Sungard.

All done!